One of the more contentious debates in the audio industry centers on digital audio resolution. Is CD-quality still good enough, or should all digital sound adhere to the higher-res options that are now available? Ok, spoiler alert: there is no definitive answer, something you’ll realize quickly enough if you delve into the tons of material available. Experienced audio professionals, the top people in their fields, make different choices when it comes to audio resolution, so it’s worth taking a brief look at some of the issues they’re presented with.

The (Very Simplified) Basics

The two benchmarks of digital audio quality are Sample Rate and Bit Resolution. Lower values can mean a less accurate numerical description of the (shape of the) audio wave: a lower Sample Rate reduces bandwidth, and a lower Bit Resolution raises distortion and noise. So, naturally, higher numbers are better, up to the limits of (human) perception. For the best balance between sound quality and system (computer) resources, we need to use a digital audio resolution that represents the audio with a level of accuracy that meets or slightly exceeds the limits of human auditory perception.

For years, those two benchmarks were standardized. CD’s standard 44.1 kHz Sampling Rate, and the Post Production industry’s standard 48 kHz Sampling Rate, guarantee bandwidth up to 22.05 kHz or 24 kHz, respectively, exceeding human hearing, which barely makes it to ~20 kHz. And CD’s 16 bits of digital resolution provide ~96 dB of dynamic range, plus a vanishingly low noise floor, and though this doesn’t quite meet or exceed the limits of human hearing (~120 dB dynamic range), it eliminated most analog flaws (tape hiss, surface noise, wow & flutter). The very low-level digital (Quantization) distortion present below -90 dB was effectively done away with by clever engineering, via the use of Dither, making it, for all practical purposes, imperceptible, and “CD-Quality” was the gold standard for years.
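The bandwidth and dynamic range figures above follow from two simple relations: usable bandwidth is half the Sample Rate, and each bit of linear PCM adds roughly 6 dB of dynamic range. Here is a minimal sketch of that arithmetic; the function names are illustrative, not taken from any particular audio library.

```python
import math


def nyquist_hz(sample_rate_hz: float) -> float:
    """Highest frequency a given sample rate can represent (half the rate)."""
    return sample_rate_hz / 2


def dynamic_range_db(bits: int) -> float:
    """Theoretical dynamic range of linear PCM: 20 * log10(2 ** bits)."""
    return 20 * math.log10(2 ** bits)


for rate in (44_100, 48_000):
    print(f"{rate} Hz sample rate -> bandwidth up to {nyquist_hz(rate):.0f} Hz")

for bits in (16, 24):
    print(f"{bits}-bit -> about {dynamic_range_db(bits):.1f} dB of dynamic range")
```

The commonly quoted 96 dB and 144 dB figures are these results rounded down to the nearest multiple of 6 dB per bit.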

Please Sir, May I Have Some More..

But if the original standard(s) met (at least perceptually) the theoretical requirements, why the call for higher resolutions? Is it really necessary to make use of the higher Bit Resolutions available currently—24 instead of 16 bits, and Sample Rates like 96 kHz, that are double (or quadruple) the original 44.1k and 48k standards, and which may negatively impact computer resources in bigger sessions? Will a 24/96 recording really sound that much better than 16/44.1... or will there even be any perceptible difference out in the real world? Let’s take a (very simplified) look at a couple of the relevant aspects of digital theory and implementation, to get a better handle on the question.

A Bit of This, a Bit of That

First off, the easy part—Bit Resolution. The one thing that everyone agrees on is that the 144 dB dynamic range of 24-bit audio is cleaner than 16-bit—it’s easily demonstrable and provable. The inevitable low-level Quantization distortion, which was (theoretically) just above the limit of human perception (the 120 dB dynamic range of human hearing), is now comfortably below it, and completely inaudible.

However, the difference is not as great as you might expect—people who remember the dramatic improvement in quality going from 8- to 16-bit resolution shouldn’t expect to hear anywhere near as much, if any, improvement going from 16 to 24. The reason is that 16 bits, especially with Dither applied, gets us so close to the limits of human perception (as far as low-level digital distortion) that, theory aside, other than a slight increase in perceived clarity, the extra resolution is often mostly unnoticeable in practice.
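To see why Dither matters at the bottom of the range, here is a rough sketch that quantizes a tone whose peak is smaller than half of one quantization step. It assumes TPDF dither (the sum of two uniform random values), which is one common choice; the article does not say which kind of Dither is meant. The helper names and the fixed random seed are illustrative.

```python
import math
import random


def quantize(x: float, dither: bool, rng: random.Random) -> int:
    """Round x (measured in units of one quantization step) to a whole step."""
    if dither:
        # TPDF dither: two uniform values summed, spanning +/- 1 step in total.
        x += rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)
    return round(x)


def tone_level(samples, tone) -> float:
    """How much of the original tone survives in samples (1.0 = all of it)."""
    return sum(s * t for s, t in zip(samples, tone)) / sum(t * t for t in tone)


rng = random.Random(0)
n = 48_000
# One second of a 1 kHz tone at 48 kHz, peaking at only 0.4 of a step.
tone = [0.4 * math.sin(2 * math.pi * 1000 * i / 48_000) for i in range(n)]

plain = [quantize(x, False, rng) for x in tone]
dithered = [quantize(x, True, rng) for x in tone]

print("tone level without dither:", tone_level(plain, tone))
print("tone level with dither:   ", round(tone_level(dithered, tone), 2))
```

Without Dither every sample rounds to zero and the tone simply vanishes. With Dither the tone is still present in the output, buried in a small amount of benign noise instead of being erased or distorted.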

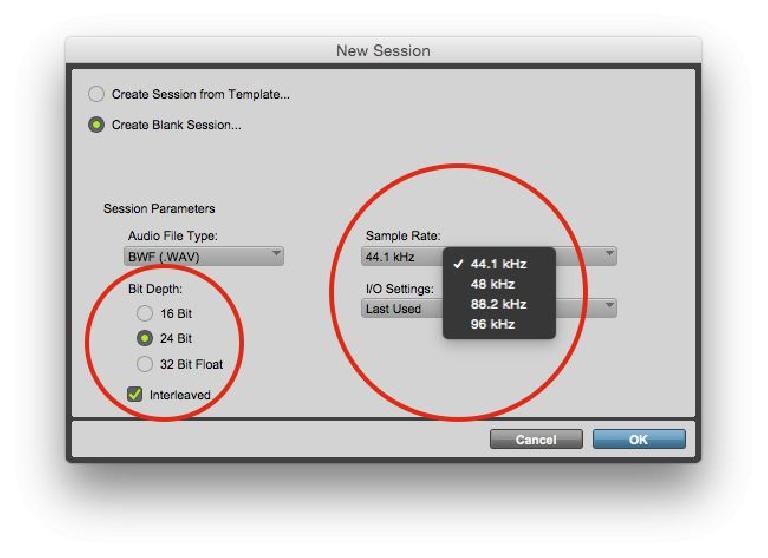

And yet, everybody (at least all pros) records at 24-bit! Why? Well, I guess the answer is, why not? 24-bit resolution doesn’t really impact system performance with any modern computer, and the files, while 50% larger, are still relatively tiny compared with the hard drive capacities we take for granted these days. And the extra dynamic range provides a little more leeway when it comes to safe recording levels. So, while the improvement may be subtle, it is there (mostly in the form of slightly greater clarity and detail in a busy mix), and it’s good practice to use it.

But what about all the buzz regarding “32-bit resolution”? Well, that’s an apples and oranges kinda thing. DAWs operate internally at 32-bit (floating-point) resolution or higher, and it doesn’t matter what res your files are. This provides virtually unlimited headroom for the constant calculations (technically, re-quantizations) that are made as you process the audio in editing and mixing. If those recalculations were done at the same resolution as the final digital file (16 or even 24 bits), the inevitable digital grit would eventually accumulate and become audible, but at the higher internal resolution, that will never happen. But when the work is done, and the file or mix is bounced down, then the standard maximum res should be 24-bit (except when prepping for CD release). There may be some workflow situations where actual 32-bit res files could be useful, but for most normal situations, 24-bit is the new standard.
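A small sketch can illustrate the headroom point. It compares a gain change done in 16-bit integer arithmetic (with clipping and rounding) against the same change in 32-bit floating point. The helper names are made up for this example, and the gains are powers of two so that the floating-point results come out exact; with other gain values the float path would leave tiny rounding errors, far below anything audible.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def gain_fixed16(sample: int, gain: float) -> int:
    """Apply gain, round to a whole step, and clip to the 16-bit range."""
    return max(-32768, min(32767, round(sample * gain)))


def gain_float32(sample: float, gain: float) -> float:
    """Apply gain and store the result as a 32-bit float."""
    return to_float32(sample * gain)


boost, cut = 16.0, 1 / 16.0  # roughly +24 dB and -24 dB

# Boost then cut: the integer path clips on the way up.
loud_fixed = gain_fixed16(gain_fixed16(20_000, boost), cut)
loud_float = gain_float32(gain_float32(20_000.0, boost), cut)
print(loud_fixed, loud_float)    # 2048 20000.0

# Cut then boost: the integer path loses low-level detail on the way down.
quiet_fixed = gain_fixed16(gain_fixed16(20_001, cut), boost)
quiet_float = gain_float32(gain_float32(20_001.0, cut), boost)
print(quiet_fixed, quiet_float)  # 20000 20001.0
```

In both directions the floating-point path returns the original value, while the fixed-point path either clips the peak or discards the fine detail.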

Sample This

When it comes to the available higher Sampling Rates (88.2k, 96k, 176.4k, 192k), there’s less agreement. At the extremes, some feel they add unmistakable air and clarity, while others feel they’re mostly just marketing hype. Much of the argument has to do with practice and perception—how digital circuits actually work, and the limits of what human hearing can actually perceive. Let’s start with the Sampling process itself...

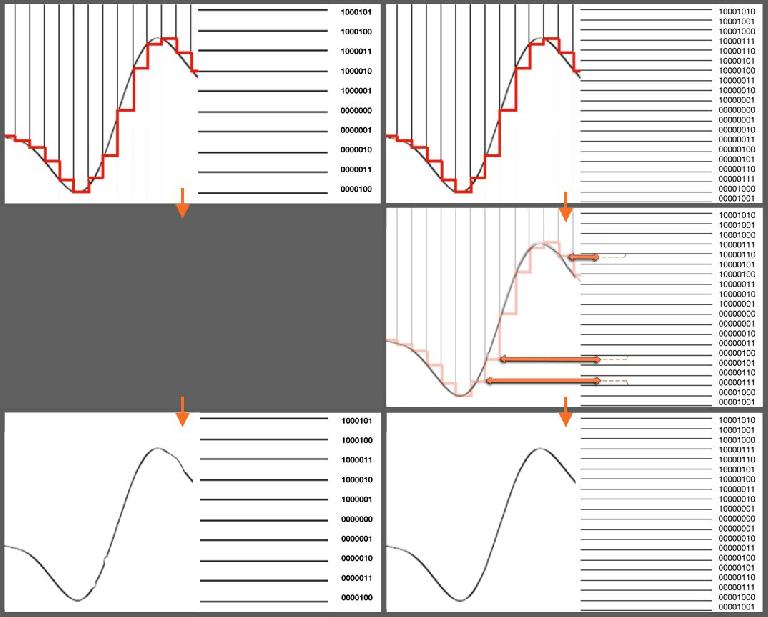

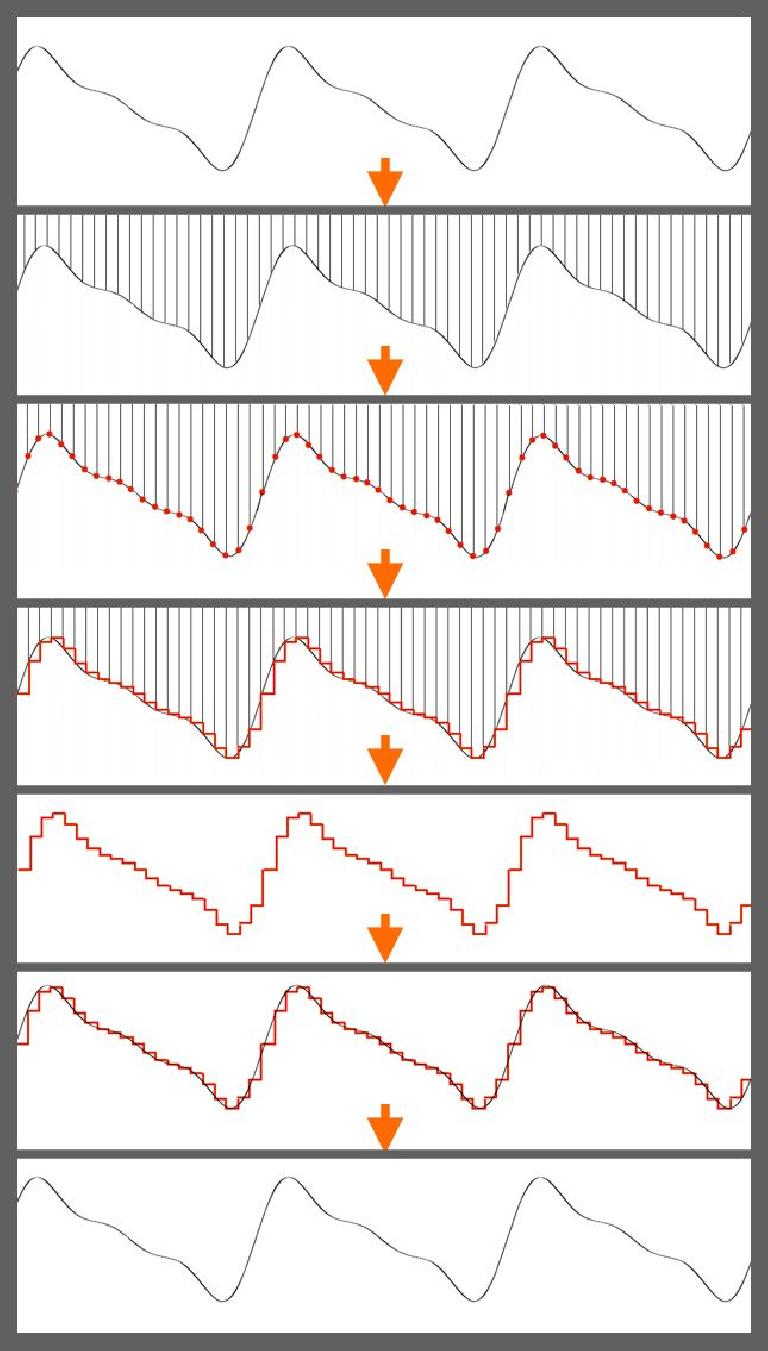

Digital audio theory is actually quite elegant, and it really does work! Despite many people’s misconceptions, sampling is a lossless process, if done correctly. As long as the famous Nyquist Rule is followed (Sample Rate must be ≥2x the highest audio frequency), and despite the stair-stepped appearance of (those diagrams of) the Sampled wave, a digital recording does not actually toss away the information (the smooth changes in the wave) between the samples, and it does actually preserve the smooth shape of the original wave, up to half the Sampling Frequency. As long as that bandwidth exceeds the capability of human hearing, Sampling has captured everything needed for accurate recording and playback.
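The claim that nothing is lost between the samples can be checked numerically. The sketch below samples a 5 kHz sine at 44.1 kHz, then uses Whittaker-Shannon (sinc) interpolation to estimate the wave's value halfway between two stored samples. Real converters use practical reconstruction filters rather than this textbook sum, and the finite number of samples used here leaves a small truncation error, so treat it as an illustration only.

```python
import math


def sinc(x: float) -> float:
    """Normalised sinc: sin(pi * x) / (pi * x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def reconstruct(samples, position: float) -> float:
    """Estimate the signal at a fractional sample position from its samples."""
    return sum(s * sinc(position - n) for n, s in enumerate(samples))


fs = 44_100.0
freq = 5_000.0
samples = [math.sin(2 * math.pi * freq * n / fs) for n in range(1001)]

position = 500.5  # halfway between stored samples 500 and 501
estimate = reconstruct(samples, position)
truth = math.sin(2 * math.pi * freq * position / fs)

print(f"reconstructed: {estimate:.4f}")
print(f"original wave: {truth:.4f}")
```

The two values agree closely even though no sample was ever taken at that instant, which is the sense in which the smooth shape of the wave is preserved.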

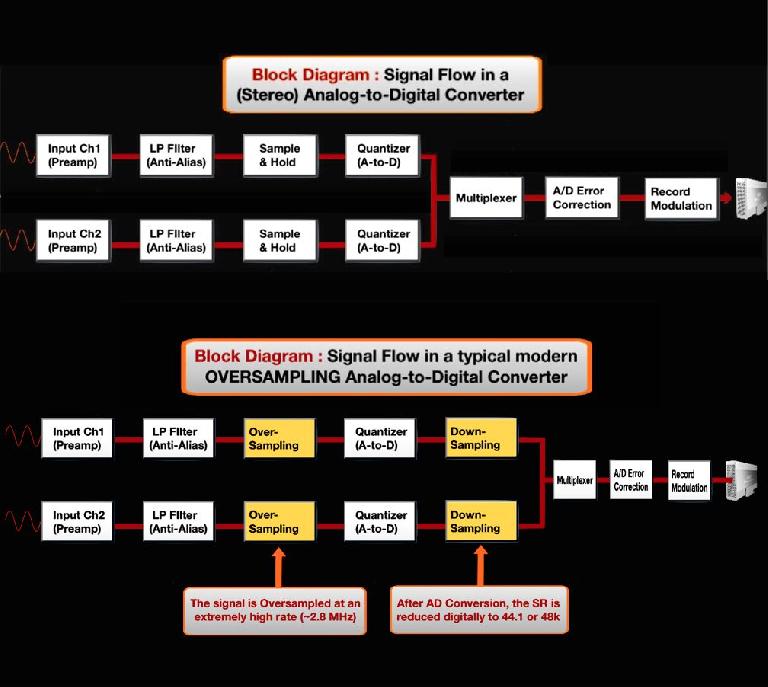

But to accomplish this without an audible artifact (spurious, inharmonic tones called Aliasing, produced when frequencies above half the Sample Rate fold back down into the audio band), a special analog lowpass filter is needed during recording, just prior to sampling: the infamous steep “brickwall” (anti-aliasing) filter, set at half the Sample Rate. In the past, these filters caused audio degradation (unwanted phase shift), since they acted on frequencies very close to the highest perceptible audio frequencies. This plagued the earliest CD recordings, and many people know this. They also know that if a higher Sample Rate is used, then any potentially degrading brickwall filter effect can also be moved up to a much higher frequency, well away from perceptible audio, so there won’t be any negative effect. Some people might think that this is a good reason to use a higher Sampling Rate, but, as it turns out, it isn’t!
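To make the need for the anti-aliasing filter concrete, here is a short sketch of where an unfiltered tone would end up after sampling. Any frequency above half the Sample Rate folds back to a lower frequency. The function name is illustrative.

```python
def alias_frequency(f_hz: float, fs_hz: float) -> float:
    """Frequency at which a tone of f_hz appears once sampled at fs_hz."""
    f = f_hz % fs_hz
    return fs_hz - f if f > fs_hz / 2 else f


fs = 44_100.0
for tone in (10_000.0, 25_000.0, 30_000.0):
    print(f"{tone:.0f} Hz sampled at {fs:.0f} Hz appears at "
          f"{alias_frequency(tone, fs):.0f} Hz")
```

A 10 kHz tone is untouched, but a 30 kHz tone, inaudible on its own, would land at 14.1 kHz, squarely in the audible band, unless it is filtered out before sampling.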

Modern digital converters have, for years now, actually sampled at much higher rates, long since eliminating the need for those troublesome brickwall filters. But the Sample Rate of the resulting digital audio file may still be 44.1k or 48k, because the higher audio frequencies sampled (above 20k, the upper limit of human hearing) are immediately discarded digitally, once the conversion is done, with little or no audible consequence from the digital filtering that accomplishes this.

But when you make a 96k recording, you’re not just avoiding brickwall filter problems, you’re actually keeping audio frequencies up to 48 kHz, well above hearing range, making files twice the size, and potentially taxing system resources. So if this isn’t necessary to avoid brickwall filter issues, then is there an audible benefit to it?
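The size figures quoted so far (24-bit files 50% larger than 16-bit, 96k files twice the size of 48k) fall straight out of the arithmetic for uncompressed PCM. A quick sketch, ignoring file header overhead and using an illustrative function name:

```python
def pcm_bytes(sample_rate_hz: int, bits: int, channels: int, seconds: int) -> int:
    """Size of uncompressed PCM audio data in bytes (no header overhead)."""
    return sample_rate_hz * (bits // 8) * channels * seconds


formats = [(44_100, 16), (44_100, 24), (48_000, 24), (96_000, 24)]
for rate, bits in formats:
    size_mb = pcm_bytes(rate, bits, channels=2, seconds=60) / 1_000_000
    print(f"{bits}-bit / {rate} Hz stereo: {size_mb:.1f} MB per minute")

print(pcm_bytes(44_100, 24, 2, 60) / pcm_bytes(44_100, 16, 2, 60))  # 1.5
print(pcm_bytes(96_000, 24, 2, 60) / pcm_bytes(48_000, 24, 2, 60))  # 2.0
```

Per track the numbers are modest, but they multiply by the track count, which is where bigger sessions start to feel the difference.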

The Ultrasonic Conundrum

Well, this is one of those areas where good people “beg to differ”. Actually, they often argue quite aggressively (at least with what passes for aggression at an AES conference). Some feel that, since many instruments and acoustic sounds do produce overtones and harmonics in the higher, ultrasonic ranges, capturing those frequencies, even though they are directly inaudible to us, will preserve their acoustic interaction with other (audible) frequencies in the recording space, which may be perceptible on a subtle level, as a greater sense of “air” and openness. Others disagree: they feel that since, to be audible, any results of such interaction must be below 20 kHz, they’ll be captured anyway, and any interference from ultrasonics once the files are recorded won’t have that same potentially beneficial three-dimensional acoustic interaction. On the con side, the naysayers also point out that most mics and speakers aren’t usually even capable of reproducing these frequencies, so they feel the whole exercise may be pointless. However, again on the pro side, it’s possible that the circuitry in some high-end hi-res converters is simply better designed and executed, and that may be a contributing factor to the perceived sense of greater air and openness that many people report when using those components for high-SR recording.

Golden Ears..?

Test measurements can’t really settle this debate. It comes down to perception, and that’s a real hornet’s nest. Those who hear obvious or significant differences at higher Sample Rates often claim better ears or experience in listening for subtle detail, while those who don’t perceive a difference claim that those who do are just influenced by the power of suggestion or expectation bias (if you expect to hear a difference, you will), and it’s all in their heads, not their ears. Double- and triple-blind listening tests often seem to bear this out, but there are always outliers who do seem to consistently hear what others don’t. Some skeptics suggest that the reproduction equipment (amps and speakers) used for listening to high-SR recordings may actually be suffering from very low-level intermodulation distortion artifacts from the recorded/reproduced ultrasonics, especially with 192 kHz recordings, and while this may be a subtly perceptible difference, and even subjectively pleasing (like many of the beloved analog distortions we sometimes miss with clean digital files), euphonious distortions are not really the point of hi-res audio, and just muddy the waters. The bottom line is, even though we learn to trust our ears in the studio over spec sheets, we also need to be aware of when we shouldn’t necessarily trust our ears. We’re all subject to the potential pitfalls of poorly structured listening tests and expectation bias, so even though it’s important to keep an open mind, we don’t want to be too open to the power of suggestion when it comes to evaluating the tools we use. In short, don’t buy into the hype; just stick to the facts.
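The intermodulation argument can be illustrated with a toy model. The sketch below sends two ultrasonic tones (24 kHz and 27 kHz) through a hypothetical, slightly nonlinear playback chain and measures what shows up at their 3 kHz difference frequency. The nonlinearity (y = x + 0.1·x²) and all the levels are invented for illustration and do not model any real amplifier or speaker.

```python
import math

FS = 192_000
N = 19_200  # 0.1 s, so every tone involved completes whole cycles


def tone_amplitude(signal, freq_hz: float) -> float:
    """Amplitude of one frequency component, measured with a single-bin DFT."""
    re = sum(s * math.cos(2 * math.pi * freq_hz * n / FS)
             for n, s in enumerate(signal))
    im = sum(s * math.sin(2 * math.pi * freq_hz * n / FS)
             for n, s in enumerate(signal))
    return 2 * math.hypot(re, im) / len(signal)


# Two tones that are both well above the range of human hearing.
clean = [0.5 * math.sin(2 * math.pi * 24_000 * n / FS)
         + 0.5 * math.sin(2 * math.pi * 27_000 * n / FS)
         for n in range(N)]

# Hypothetical mildly nonlinear playback chain: y = x + 0.1 * x^2
distorted = [x + 0.1 * x * x for x in clean]

print(f"3 kHz level, linear chain:    {tone_amplitude(clean, 3_000):.6f}")
print(f"3 kHz level, nonlinear chain: {tone_amplitude(distorted, 3_000):.6f}")
```

With a perfectly linear chain nothing appears at 3 kHz. With the nonlinear one, an audible-band tone appears that was never in the recording, which is the kind of artifact the skeptics have in mind.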

Wrap-Up

Ultimately, people will continue to use whatever resolutions they feel are appropriate. Though pretty much all pro-level projects are 24-bit, the Sample Rates chosen range from the more traditional 44.1k and 48k to 96k, and sometimes even 192k (though some well-respected engineers feel that 192k is more hype than anything).

Sometimes the choice is made for you—the classical and jazz fields, with their acoustic sounds and high-end analog gear, have embraced 96/24. But with the often grittier sounds of rock, pop, or EDM styles, there’s more of a choice—some projects are done at 96/24, others stick to 44.1/24 or 48/24. I’d say, look around at the studio, the gear, the sources, and use your judgment.

© 2024 Ask.Audio

A NonLinear Educating Company

Discussion

I have actually only done the first part with most of my (soft) synths and have been surprised how good it sounds with synth sources at 88.2 in a 44.1 project.

You're right, software instruments are one application where oversampling can potentially make a more noticeable difference—especially with creative applications that involve more extreme sample manipulation, which can sometimes clearly benefit from higher sampling rates. I didn't get into that for two reasons—the article was intended to be focused on playback/distribution more than processing, and it was already long and technical enough (especially before I edited it down!).. ;-)

As I've always understood it, most plug-ins that oversample (for maximum clarity with certain kinds of intensive processing, like some modeling emulations) will be fine doing that internally (if you enable it), regardless of the session settings..

I also didn't get into the whole issue of people listening to all those pristine hi-res audio productions as MP3s/MP4s, but I guess that's an issue for another day.. ;-)

Cheers,

Joe
