Interview: Keith McMillen on Tech, Synths & Multi-dimensional Music Controllers

Hollin Jones on May 29, 2016 in Interviews

AskAudio: Can you tell us something about your background and how you got into the field of music technology?

Keith McMillen: When I was a kid, I was fascinated with anything related to musical instruments or anything that made sound. It wasn’t until I was about 15 that they discovered I was heavily nearsighted and hadn’t been diagnosed. So my world was like a sphere around me and I think that’s one reason my interest in audio was so keen. My family was very musical so I started playing guitar and messing around building amps, trying not to kill myself! At University I studied acoustics and that gave me a very solid background in the physics of audio, as well as engineering and performing skills. That was in Illinois but I moved out to California and started a company called Zeta Music. We designed, built and sold electric violins, which became pretty much the standard. That was the late 1970s, and they’re still in production.

That was really enjoyable to work on, but I also really wanted to have guitars be able to control synthesis and I started that research back in 1979. It’s a really big challenge but I knew that it could be done. Zeta was purchased by Gibson Guitars. When I was at Zeta, I also designed the first programmable mixer in the world, the AKAI MPX820, which I licensed to them. I thought that computers definitely had a future and I wanted to find a way that musicians could be connected via networks on stage, so that the performer could affect the score and the instruments could affect each other. That’s a passion that I still feel strongly about.

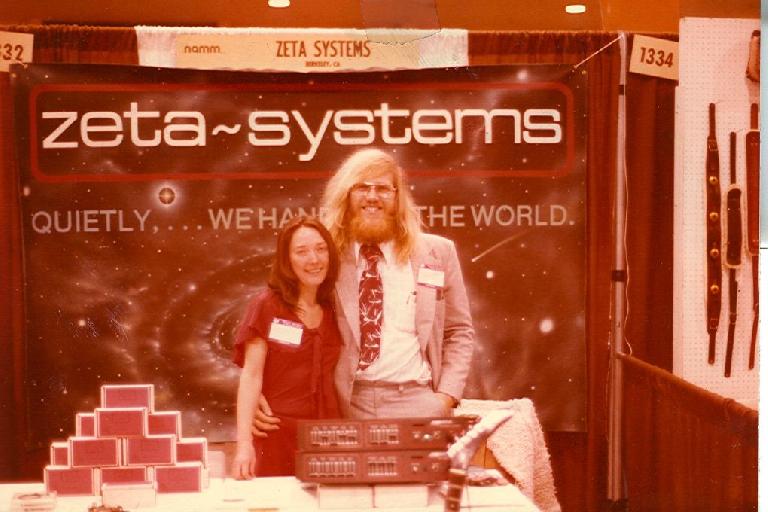

caption: 1st NAMM for Keith McMillen.

Ask: What was your role at Gibson?

KM: I was VP and chief scientist for about five years and did some work with Harman, all the while continuing my experiments with networked musicians. I started another audio company in 2000, sold it and was able to play music freely for about three years off the proceeds. I had a trio and we travelled around with a lot of equipment to show how networked musicians would sound. The score would move along to a certain point and then it wouldn’t progress until the violinist played a specific phrase, or the timbre from the bass would affect the timbre of my guitar. You listen like never before when the sound of one instrument will actually change the nature and sound of your own instrument.

Ask: And how does that actually happen, in terms of the equipment and the technology?

KM: I was able to produce accurate MIDI output from each of the instruments: a guitar, violin and upright bass, all of which I had built, and their polyphonic audio was brought into a computer along with some outboard processing gear. The computer had a bunch of programs I’d written and it would present scores, and at certain points it would trigger different processing of sounds based on specific conditions. The score could modify us and we could modify the score, and it added a whole new layer to the performance.

The synthesizer was going from being this “new” thing to becoming a valuable musical tool, and it meant that you didn’t have to bring a whole bunch of things like pianos to your shows. Part of my work is to create what I call a new system of integration and I have a non-profit organization that’s dedicated to keeping scores alive: you download some software and write with it. So even if the technology used in the original score moves on, the essentials of what the composer intends and the methodologies on how to accomplish this are still alive. Just like you can open a Word document from 20 years ago, it’s quite possible to have a computer platform with a musical score that will move forward in the future, to encourage artists to explore.

Ask: Tell us about KMI, your current company.

KM: I started KMI in 2007 to produce smaller instruments and to improve the relationship between acoustic generating instruments and electronic music and synthesis control. I just kept building the things I needed and wanted! Being in the Bay Area there are so many people who have both technical and musical skills and I hired some great people, and that just continued to grow. Now we have about 30 people and we continue to create instruments that we find necessary and interesting, the most interesting being the K-Mix.

Ask: That looks really interesting—the multi-functional nature of it and the fact that it operates without a computer.

KM: If you’re gonna make something you may as well make it well, and make it useful to as many people as possible. When I travelled making music, the only ones who made money were the airlines and the chiropractors! Everything has to fit on a carry-on because musicians hardly get paid at all. It’s liberating to be able to set up quickly and focus on the performance and the audience and then be able to break it down and be gone in 15 minutes instead of an hour and a half. Especially in the guitar world, that seemed not to have happened. Guitarists seemed to be happy with a couple of stompboxes but the styles of music you can make with that stuff were pretty much codified in the '60s and '70s. I don’t hear much new music made with that stuff. So I wanted stringed instruments to be more modern in terms of what they can achieve sonically.

The keyboard made sense at the start for synthesizers but if you’re a string player you get all these other things that aren’t necessarily notes: percussion, bow noise and so on, and all of that needs to be part of the control path as well.

Ask: How limiting is MIDI as a protocol when you’re addressing things like that? I read that you did some work on what would become OSC as an alternative to MIDI. Presumably looking for a way to do things that MIDI couldn’t do?

KM: It seemed like back in the early '80s it would have been a good time to have a MIDI 2.0 but for reasons that were out of my control, the IP associated with what I was working on at the time became contentious and the window kind of closed on that. OSC is great but it didn’t really accomplish one of my intents which was to have the control messages be expressed in acoustical terms. Brightness, roughness, noise vs. harmonic components, violinists bouncing their bows on the strings: these things are very difficult to transmit. MIDI is still very capable of transferring information over a digital connection, but it’s not perfect. It’s going to have a long life but I wish we could have more definition so the musicians aren’t so frustrated by having to set up bend range to match what happens on their guitar, for example.
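To make the bend-range point concrete, here is a minimal Python sketch. It assumes the standard MIDI 1.0 pitch bend message, which carries a 14-bit value centered at 8192 and says nothing about how many semitones that value represents. The function names are hypothetical and are not taken from any KMI product or from McMillen's own software.

```python
PITCH_BEND_CENTER = 8192   # 14-bit midpoint: no bend
PITCH_BEND_MAX = 16383     # largest 14-bit value


def bend_value(semitones: float, bend_range: float) -> int:
    """Encode a bend of `semitones` as a 14-bit value, assuming the
    receiver bends `bend_range` semitones either side of center."""
    if bend_range <= 0:
        raise ValueError("bend_range must be positive")
    raw = PITCH_BEND_CENTER + round(semitones / bend_range * 8192)
    # Clamp, because full upward bend would otherwise land on 16384.
    return max(0, min(PITCH_BEND_MAX, raw))


def bend_message(channel: int, value: int) -> bytes:
    """Pack a pitch bend value as status byte, 7-bit LSB, 7-bit MSB."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0..15")
    if not 0 <= value <= PITCH_BEND_MAX:
        raise ValueError("value must be 0..16383")
    return bytes([0xE0 | channel, value & 0x7F, (value >> 7) & 0x7F])


def heard_semitones(value: int, receiver_range: float) -> float:
    """Bend the receiver actually plays for `value` at its own range."""
    return (value - PITCH_BEND_CENTER) / 8192 * receiver_range


# A whole-tone string bend, encoded for a 12-semitone range.
value = bend_value(2.0, 12.0)
# The same message played by a synth left at a 2-semitone range.
mismatch = heard_semitones(value, 2.0)
```

Under these assumptions, the whole-tone bend is encoded as 9557, and a synth left at a 2-semitone range plays it as roughly a third of a semitone. The message itself cannot tell the receiver which range was intended, so both ends have to be set to match by hand.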

Ask: What struck me watching videos of the K-Board Pro was that the way the user interacts with it is a little bit like playing a Hammond organ. Not literally the same movements but you’re using multiple controls while you’re playing the keys.

KM: Your analogy is perfect: imagine the drawbars being the actual keys themselves. People understand these motions but they’ve not been nicely integrated and sound designers haven’t really mapped them—although that’s changing. I have hope that there will be more instruments that will support these kinds of things. The processing power we have available to us is phenomenal but the controllers aren’t yet fully realized. Historically, the technology has always preceded the new music.

Ask: You’ve been involved with developing some really interesting technology over the years and continue to do so. What do you think might be the next big thing in music technology? Do you have any predictions about the wider music technology world?

KM: I’m not entirely without hope. I don’t see much at trade shows that I’d consider all that innovative. It’s a chicken and egg problem: musicians have been formalized in terms of what they want to produce by what they listen to and the business of music has reduced the variety of things people are exposed to. And so their demands are not challenging. I don’t imagine that will last forever though. One good thing that seems to be a theme is convenience. I hope that musicians will come to embrace equipment that is more innovative and powerful. I think we have everything we need in terms of instruments but it’s a matter of cleverly understanding more of the human gesture and being able to send that to software and have it work right away. Some standardization on what this gesture does to a synthesizer isn’t too much to ask, and it is happening. The cost of multi-dimensional controllers is coming down as well which will help. It’ll take a while but we’ve been spoiled by how easy it is to have a multichannel recorder live in our pockets. The technology is ahead of our tastes! It will change but not very quickly.

Web: https://www.keithmcmillen.com

Read our review of the K-Mix: https://ask.audio/articles/review-keith-mcmillen-kmix-audio-interface-digital-mixer-control-surface

© 2024 Ask.Audio

A NonLinear Educating Company

