For those not familiar with AES conventions, they differ from traditional audio trade shows like Musikmesse and NAMM in that academia takes center stage. There are workshops, tutorials, demonstrations and lectures, as well as technical committee meetings and over 150 presenters (students, scientists, programmers, and researchers) presenting their published academic papers on a wide array of audio topics.

For the Paris convention this year a Professional Sound Expo (PSE) was added for the first time, with over 130 exhibitors taking part.

The PSE also hosted a series of lectures and tutorials given by leading audio experts and representatives from various manufacturers. There were twenty-five tutorials alone, covering microphones, acoustics, sound quality in digital audio interfaces, binaural recording techniques, and much more.

I focused mainly on my own areas of expertise and interest: music production and sound design, acoustics, loudness standards, and immersive audio.

Immersive Audio

One word sums up the AES Paris convention: immersion.

From practical demonstrations to high-level academic abstraction, the hot topic was 3D audio and all the issues and solutions around its recording, coding, design and implementation.

This year’s keynote lecture, entitled “The Immersive Audio Revolution: From Labs to Mass-Market,” was given by leading 3D audio researcher Rozenn Nicol of Orange Labs. She talked about how cost-effective technological developments have made it commercially viable for virtual reality to reach the individual, and gave a comprehensive overview of both the past and future of 3D audio.

There were various workshops with panels of experts, from sound designers to programmers, presenting prototype sound design software tools, SDK packages for Unity, and practical workflows for mixing and rendering 3D sound for VR. There were lectures on production techniques for creating immersive mixes, such as Ambisonics processing and Head-Related Transfer Function (HRTF) rendering, along with practical demonstrations of the results.

Editor's Note: We actually reviewed an early version of Particles. Read our review here.

Binaural sound and Ambisonics (a multi-microphone, multi-channel recording and playback technology developed in the 1970s) have long been on the periphery of audio, with no real-world applications, but not anymore. Both have come to the forefront in the last few years, with major investment from academic and commercial entities alike. The BBC has made great strides towards improving the binaural experience, and large industry players are using Ambisonics for their professional applications. The Sony PlayStation team uses Ambisonics for the audio engine of PlayStation VR, and Google plans for Ambisonics to be the 3D audio format for YouTube 360.

There are many issues to overcome, but one thing is for sure: realistic spatial audio is on its way to the mass market. Make no mistake, it isn’t a gimmick like 3D cinema and TV turned out to be. Although it is still in its early phases, 3D audio is incredible when done well, and all the attention it is getting is well justified.

Audio Production: Streaming Loudness vs. Loudness Standards

Putting the immersive audio experiences aside, there were other topics of great interest, especially for modern music producers: namely, the quality of music streaming services and a drive to have them adopt a uniform loudness standard, as TV has.

TV has been the first front to fall in the “loudness wars” as broadcasters around the world have come together to agree on optimum loudness levels for media content. The beauty of the loudness standards is that they take us back into the realm of delicious dynamics and have, at their core, an ear on audio quality.

Unfortunately, streaming services seem to be all over the place regarding levels and playback quality. This is an issue because streaming is rapidly becoming the preferred way to consume not only music but broadcast-type content too.

One lecture, aptly titled “Screaming Streaming,” showed empirical evidence of the artefacts being introduced by online streaming companies through the compression codecs they use. It also showed that some services are even adding radio-style dynamic range compression and limiting on top of the lossy data compression, which adds even more distortion and noise.

The presentation was quite convincing.

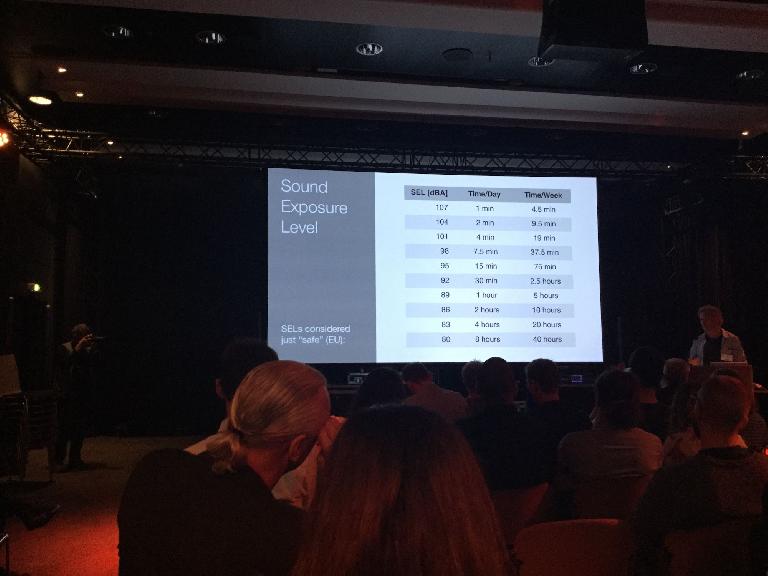

Another driver behind implementing the standards is health and safety.

Hearing damage is a major concern as more and more people, young and old, turn to their mobile devices and streaming services to listen to music. There are some measures to mitigate levels, such as iTunes Sound Check and built-in volume limits on mobile devices, but their implementation more often than not compromises the quality of the audio, and people are still listening at unhealthy levels.

The loudness standards adopted by TV are a very important victory in the war on loudness, and hopefully we will see the music industry (labels, producers, mastering engineers and streaming services) adopt something similar.
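To make the idea of a uniform loudness target concrete, here is a minimal sketch of the gain a normalizing player or broadcaster would apply. It assumes the EBU R 128 broadcast target of -23 LUFS; the two track loudness values are hypothetical, and no streaming service is claimed to work exactly this way.

```python
# Illustrative sketch only: the track loudness figures below are made up.
TARGET_LUFS = -23.0  # programme loudness target from EBU R 128 (broadcast)


def normalization_gain_db(measured_lufs: float, target_lufs: float = TARGET_LUFS) -> float:
    """Gain in dB that moves a programme from its measured loudness to the target."""
    return target_lufs - measured_lufs


def db_to_linear(gain_db: float) -> float:
    """Convert a gain in dB to a linear amplitude multiplier."""
    return 10.0 ** (gain_db / 20.0)


if __name__ == "__main__":
    # Hypothetical integrated loudness of two masters of the same song.
    masters = [("dynamic master", -18.0), ("hyper-compressed master", -8.0)]
    for name, lufs in masters:
        gain = normalization_gain_db(lufs)
        print(f"{name}: {gain:+.1f} dB (x{db_to_linear(gain):.3f})")
```

Under these assumptions the hyper-compressed master is simply turned down further, so it ends up no louder than the dynamic one, which is why a shared target removes the incentive to crush dynamics.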

Acoustics: Dealing with Issues in Small and Odd-Shaped Spaces

Acoustics lie at the heart of audio, not only in researching how we hear, but also in how to solve and improve inherent acoustical problems, from systems as small as measurement tools and mobile devices to systems as large as theaters and stadiums.

Of the many talks and papers on acoustics, two areas are of particular interest:

On the practical side there is continuing research into the issues of acoustics in small spaces, how to analyze low frequency data more accurately, and how to develop cost-effective ways to address them. One researcher, for example, is doing case studies on a number of actual small rooms, measuring and analyzing them using new methodologies, and publishing the results for others to learn from.

On the theoretical side, there is work on how to mathematically model resonant frequencies in non-shoebox-shaped rooms, a problem many budding project acousticians have run into. Most room-mode calculators and modelling systems assume an ideal shoebox shape in the math itself, which is a problem since many bedrooms are not so straightforward. There is no time to go into details, but the results are quite promising. Hopefully we will soon see online room-mode calculators able to predict modes in our funny-shaped bedroom studios.
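For context, the shoebox assumption mentioned above comes from the standard formula for the resonant frequencies of an ideal rectangular room with rigid walls. The sketch below implements only that textbook formula, not the new non-shoebox methods presented at the convention; the room dimensions, the 200 Hz limit and the function names are illustrative assumptions.

```python
import math
from itertools import product

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at roughly 20 degrees C


def room_mode_hz(nx, ny, nz, length, width, height, c=SPEED_OF_SOUND):
    """Mode frequency of an ideal rigid-walled rectangular room (dimensions in metres)."""
    return (c / 2.0) * math.sqrt(
        (nx / length) ** 2 + (ny / width) ** 2 + (nz / height) ** 2
    )


def lowest_modes(length, width, height, max_order=2, limit_hz=200.0):
    """List (frequency, (nx, ny, nz)) for all modes up to max_order below limit_hz."""
    modes = []
    for nx, ny, nz in product(range(max_order + 1), repeat=3):
        if (nx, ny, nz) == (0, 0, 0):
            continue  # the all-zero index is not a mode
        f = room_mode_hz(nx, ny, nz, length, width, height)
        if f <= limit_hz:
            modes.append((f, (nx, ny, nz)))
    return sorted(modes)


if __name__ == "__main__":
    # Hypothetical 4.0 m x 3.0 m x 2.5 m bedroom studio.
    for f, indices in lowest_modes(4.0, 3.0, 2.5):
        print(f"{f:6.1f} Hz  mode {indices}")
```

As soon as a wall is angled or an alcove is added, this formula no longer applies, which is exactly the gap the research described above is trying to fill.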

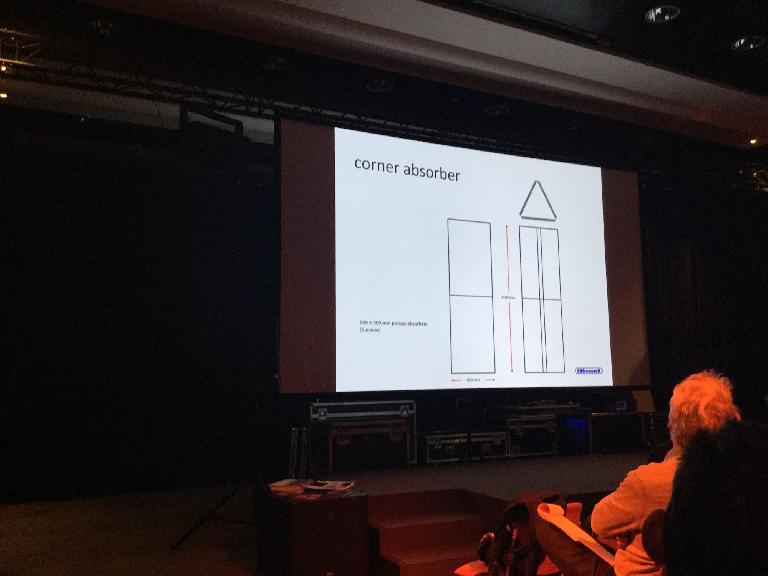

Researching and modeling acoustics in small spaces is increasingly relevant now that more and more people are making music in spaces never designed for it, and the overwhelming consensus, from audio manufacturers and acousticians alike, is that the importance of acoustic treatment is greatly underestimated. Consumers spend too much of their often limited budget on gear but do not treat the space they hear it in, defeating the point of investing in quality equipment.

The good news is that it really is possible to treat a room on a small budget and get excellent results with careful speaker placement, basic measurements, and accurate placement of absorbers and diffusers made from the correct materials.

Active Acoustic Bass Traps

To be honest, even after all the immersive, 3D audio, and binaural “experiences” I took part in, this is the one product that truly had me say “wow” out loud, and it was arguably the most straightforward technology at the whole fair.

The AVAA—Active Velocity Acoustic Absorber by PSI Audio looks like a cross between a speaker and a bass trap (because it is both), and what it does is incredible.

It is designed to absorb standing room modes just like a passive absorber, but more efficiently, and taking up less space. The AVAA has the same effect, on frequencies from 15 to 120 Hz, as opening a window about five to ten times its size.

It works by having a microphone measure the acoustic pressure in front of an acoustic resistance. The acoustic resistance is designed to let air through but reduce the pressure significantly. A transducer membrane is driven to absorb the volume of air going through the acoustic resistance. This significantly reduces the acoustic pressure (between 15 and 120 Hz) around the microphone and around the AVAA.

I was invited to a demo and was very skeptical at first because, as an armchair acoustician, I’m well aware how difficult it is to control the low-end frequency range. Even top-level studio designers spend a great deal of time tweaking down here. But what I heard was convincing enough that I immediately requested a more in-depth demo of the product. More on that soon!

Virtual Reality Mobile Head Tracking

There are a lot of inherent issues with binaural and immersive technologies at the moment, too technical to go into now, but suffice it to say, 3D immersive audio is not quite “there” yet.

One simple problem facing immersion with binaural media is that in order to experience the sound realistically you have to use headphones (for now), and you have to sit quite still. As soon as you move your head the illusion that you are in a 3D space is lost, because the whole sound stage moves with you. In virtual reality this is unacceptable: visual cues inside the headset allow you to look around, so the sound has to follow, or more accurately NOT follow, your head.

This issue has largely been solved by head tracking of course, but even that has been difficult to implement realistically.

The major problem is that our own heads act as a pre-filter, unique to each of us, on all the sounds that arrive at our ears, including the source-location-specific cues created by sound arriving at each ear at different times. This filtering is described by a Head-Related Transfer Function (HRTF). When a dummy head (or anyone else’s head) is used to record a binaural signal, our own HRTF information is missing during playback in headphones, so the recording sounds off somehow.
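One ingredient of an HRTF, the difference in arrival time between the two ears, can be roughly approximated with the classic spherical-head (Woodworth) formula. This sketch is added for illustration and was not part of any presentation; the head radius is an assumed average, and a real HRTF also includes frequency-dependent filtering from the head, torso and outer ear that this ignores.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumed average head radius in metres
SPEED_OF_SOUND = 343.0   # m/s, assumed value for air at roughly 20 degrees C


def itd_seconds(azimuth_deg, head_radius=HEAD_RADIUS_M, c=SPEED_OF_SOUND):
    """Woodworth interaural time difference for a distant source.

    azimuth_deg is measured from straight ahead (0) to directly beside
    one ear (90). The formula treats the head as a rigid sphere.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth_deg must be between 0 and 90")
    theta = math.radians(azimuth_deg)
    return (head_radius / c) * (theta + math.sin(theta))


if __name__ == "__main__":
    for azimuth in (0, 30, 60, 90):
        print(f"{azimuth:3d} degrees: {itd_seconds(azimuth) * 1e6:6.1f} microseconds")
```

Changing the assumed head radius changes every value, which hints at why a recording made with someone else's head can sound subtly wrong.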

With normal binaural playback it is common for listeners to experience sound as if it is happening off to the side and behind the head; i.e., not immersive at all, and this is a result of the HRTF problem discussed above. It really is weird, and I must admit it left me feeling quite underwhelmed with the whole binaural/3D revolution that was taking place all around the convention.

That is, until I tried it with head tracking.

Holy expletive.

Even without a personalised HRTF, once head tracking is involved, the binaural/3D audio experience literally takes on a whole new dimension. It is like a turbo charger kicking in, in every direction. For the demo the 3D space is a virtual 5.1 speaker environment, but depending on device resources hundreds of sounds, and directions, are possible. Also, once a device is strapped into VR goggles, like Google Cardboard, the phone itself can act as the head tracker with information gleaned from its accelerometer.

You have to experience it to believe it.

It is clear from this demo why there is so much time and energy being invested in this area of audio: it is literally a game changer. And the beauty of the current state of accessibility to technology, coupled with research and testing at all budget levels, is that small developers are running neck and neck with larger companies to find a solution, and may be beating them in some areas already.
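The principle behind head tracking described above (the sound stage must stay put while the head turns) can be sketched in a few lines. This is a simplified illustration handling yaw only, not the demo's actual implementation; the speaker angles follow the usual 5.1 layout, positive angles are taken to mean "to the left", and all names are hypothetical.

```python
def wrap_degrees(angle):
    """Wrap an angle in degrees to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


# Virtual 5.1 speaker azimuths in degrees (assumed layout; positive = left).
# The LFE channel is omitted because it carries no directional information.
VIRTUAL_SPEAKERS = {"L": 30.0, "R": -30.0, "C": 0.0, "Ls": 110.0, "Rs": -110.0}


def head_relative_azimuths(head_yaw_deg, speakers=VIRTUAL_SPEAKERS):
    """Direction of each virtual speaker relative to the listener's nose.

    Subtracting the head yaw keeps the speakers fixed in the room: turning
    the head left by 30 degrees puts the left speaker straight ahead.
    """
    return {name: wrap_degrees(az - head_yaw_deg) for name, az in speakers.items()}


if __name__ == "__main__":
    for yaw in (0.0, 30.0, 90.0):
        print(f"head yaw {yaw:5.1f}: {head_relative_azimuths(yaw)}")
```

A binaural renderer would then pick the HRTF for each head-relative direction on every tracker update, so the virtual speakers appear to stay where they are as the listener looks around.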

Spatial and 3D Audio Software

On display were some pretty amazing developments in spatial plug-ins and beta software environments.

The word on everyone's lips was Nugen Audio, whose stereo and loudness tools are absolutely astounding and just short of magic. Google also showed their free 3D audio SDK for Unity, and the demo was very impressive. For sound design, new developments in software for immersive design and mixing were on show, each with exciting applications in both gaming and cinema production. These include the ability to add and manipulate thousands of sound “particles” in one environment and have them behave in very realistic ways, with an impressive amount of control.

Auro 3D had a huge presence with their multi-channel, multi-speaker technology, and while most demos were a bit on the gimmicky side, one or two sound designers really did show the advantages of adding the extra “height” layer to a standard 5.1 surround set-up. Auro 3D 10.1 adds four height channels 30° above the standard 5.1, plus a “Voice of God” speaker at 90°, directly over the audience.

As with all multichannel systems, processing power is a hindrance, as is developing codecs to make sense of the multiple streams of audio once they are bottlenecked into lower channel counts or plain old stereo.

But the possibilities are tantalizing, and once the spatial tools become more widely available some very interesting audio will emerge.

Conclusion

I haven’t even scratched the surface of all the great lectures and papers that were presented, or all the great software being developed. I often had to flip a mental coin to decide which of two overlapping lectures to attend, not to mention finding time to go and drool over some high-end gear.

The AES Conference on Audio for Virtual and Augmented Reality will be held on September 30 and October 1, 2016, at the Los Angeles Convention Center, as an independent event co-located with the 141st AES Convention. If you have half a chance to attend, do not hesitate to go.

Visit AES website here.

© 2024 Ask.Audio

A NonLinear Educating Company
