Nowadays most people probably turn to virtual synths and samplers when electronic instruments are called for - Logic in particular comes with an impressive collection. These are simple to use: just instantiate the plug-in, choose and tweak the sound if desired, and they’re ready to play. But many people also incorporate external hardware synths and samplers into their workflow, and that can be a little more complicated to set up, especially for those used to working entirely inside the box. Here are a few things to keep in mind when working with external instruments.

The Hookup

The initial setup of an external instrument may require parallel connections, depending on whether the instrument can be played from its own built-in keyboard (or set of drum pads), or if it’s a rackmount or tabletop module that’ll need to be triggered from a separate MIDI keyboard or controller. Let’s look at the latter scenario first.

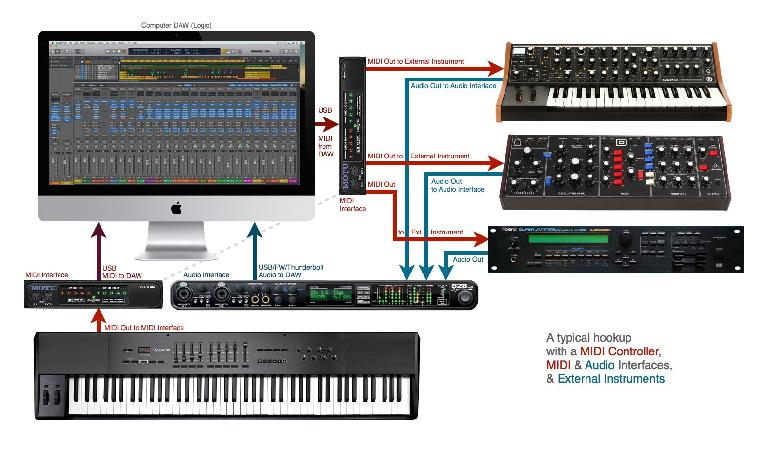

Integrating an external sound module and separate keyboard into a DAW rig requires two parallel connections - the audio that’ll carry the sound into the computer, and the MIDI connection from keyboard/controller to the module. If there’s only one keyboard and instrument involved, then this is simple - MIDI out from the controller to the module, and audio out from the module to an audio input on the DAW interface. But if there are multiple external instruments in the setup, all to be played from the same keyboard, then the hookup may be done a little differently.

The user is not going to want to keep re-patching the controller into each different instrument, so if the instruments all have traditional MIDI connections, the typical approach would be to utilize a MIDI interface with multiple MIDI Ins & Outs. A MIDI Out from the keyboard would be sent into a MIDI In on the MIDI interface, and parallel MIDI Outs from the interface would be hooked up to each of the hardware instruments. The MIDI interface would be connected to the computer/DAW via the usual USB connection. Then the user should be able to route all MIDI performance data through the DAW, selecting either internal instrument plug-ins or external instruments to be played, from that one central location.
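To make the routing idea concrete, here is a minimal Python sketch of what the DAW effectively does when one keyboard plays several hardware modules: each instrument is assigned an output port on the MIDI interface plus a MIDI channel, and outgoing notes are stamped with that channel. The instrument names and port/channel numbers in `ROUTES` are hypothetical placeholders, and the sketch only builds raw MIDI 1.0 bytes rather than sending them through any real driver.

```python
# Hypothetical routing table: each hardware instrument sits on its own
# MIDI interface output port and listens on a chosen MIDI channel (1-16).
ROUTES = {
    "rack_synth": (1, 1),     # (interface port, MIDI channel) - assumed values
    "drum_module": (2, 10),
    "sampler": (3, 2),
}


def note_on(instrument: str, note: int, velocity: int) -> tuple[int, bytes]:
    """Return the output port and raw 3-byte Note On message for an instrument."""
    port, channel = ROUTES[instrument]
    if not (1 <= channel <= 16 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("channel must be 1-16; note and velocity 0-127")
    status = 0x90 | (channel - 1)  # Note On status byte; low nibble is channel - 1
    return port, bytes([status, note, velocity])


if __name__ == "__main__":
    port, msg = note_on("drum_module", 36, 100)
    print(port, msg.hex())  # prints "2 992464"
```

The single controller never changes; only the lookup decides which port and channel a performance goes to, which is the "one central location" the paragraph above describes.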

The Sound

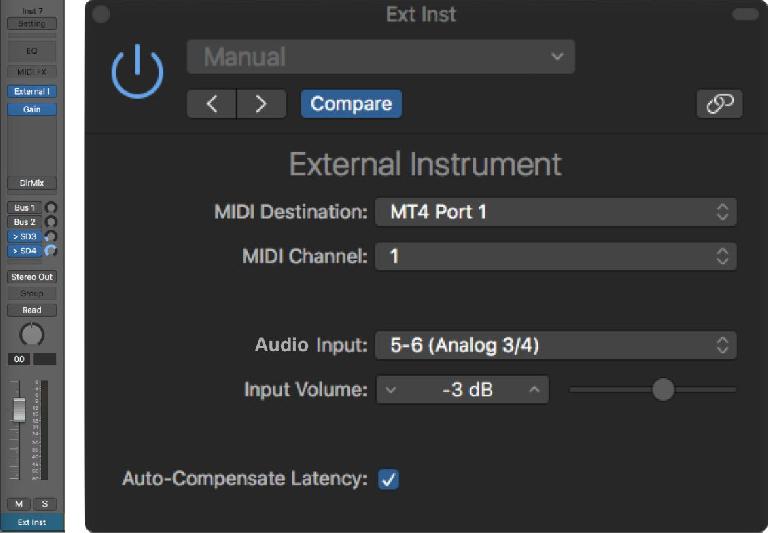

The other half of the external instrument hookup is the sound. Most external instruments will have regular audio outputs (mono or stereo, sometimes multi-outs), and these need to be plugged into inputs on the audio interface to bring each instrument’s sound into the DAW. In most DAWs these audio inputs would then be used as the sources for corresponding Aux input channels. Logic goes a step further, with an option that incorporates both the MIDI and audio connections into a single channel strip - the External Instrument plug-in, which is instantiated in an Instrument track. This plug-in directs the flow of the outgoing MIDI performance data (port & channel) and receives the incoming audio from the instrument, bringing it into the mixer (with a handy trim control to ensure an appropriate input level).

Once each external instrument is set up to receive MIDI from the controller (through the DAW) and send audio back into the DAW (to an Aux or Ext Inst channel), then the hookup is complete. Depending on exactly how the connections are made and routed in the DAW, the user can record only the MIDI performance, only the audio, or sometimes both. I’ll come back to that, but there’s one more hookup issue I need to mention first.

Local Off

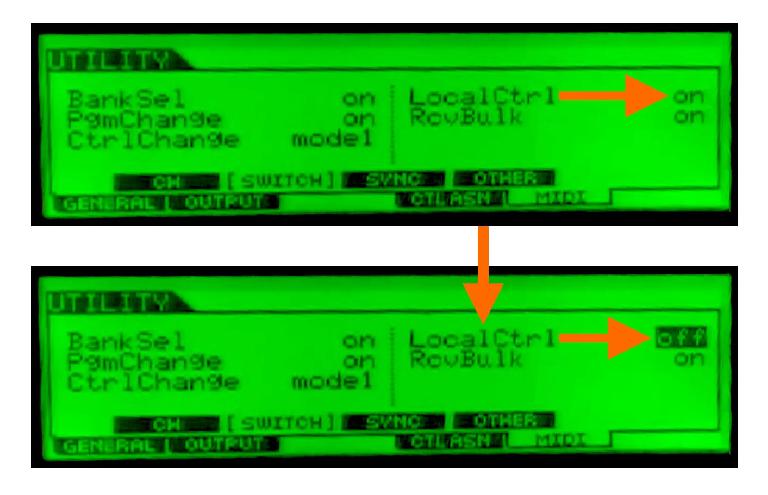

If the keyboard/controller being used also has its own internal sounds, that introduces another wrinkle to the setup. Notes played on that keyboard will automatically trigger its internal sounds. That’s convenient if it’s the only external instrument in use, but it becomes a problem when the same keyboard needs to trigger both its own sounds and the sounds of other external modules.

If the MIDI out from the keyboard is routed through the DAW, then each note will be double-triggered - once from the internal MIDI/instrument connection, and again from MIDI through the DAW, creating an unpleasant flanging-type effect. And if another instrument is selected, the keyboard would normally play both the other instrument and its own internal sounds. Of course, its own audio outputs could be muted/silenced, but those sounds may already be in use, playing audio from another already-recorded MIDI track.
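The “flanging-type effect” comes from the same sound being triggered twice a few milliseconds apart. When two near-identical copies are summed with a small time offset, they cancel at evenly spaced frequencies (comb filtering), at f = (2k + 1) / (2 × delay). The minimal sketch below computes where those notches fall for an assumed delay; the 5 ms figure in the example is illustrative, not a measured value, and patches with free-running oscillators or randomized sample starts will smear the notches into something closer to chorusing.

```python
def comb_notches_hz(delay_ms: float, max_hz: float = 20000.0) -> list[float]:
    """Notch frequencies when a signal is summed with a copy delayed by delay_ms.

    For equal-level copies, cancellation occurs where the delay equals an odd
    number of half-periods: f_k = (2k + 1) / (2 * delay).
    """
    if delay_ms <= 0:
        raise ValueError("delay must be positive")
    delay_s = delay_ms / 1000.0
    notches = []
    k = 0
    while True:
        f = (2 * k + 1) / (2 * delay_s)
        if f > max_hz:
            break
        notches.append(f)
        k += 1
    return notches


if __name__ == "__main__":
    # Assumed 5 ms gap between the local trigger and the copy routed via the DAW
    print([round(f) for f in comb_notches_hz(5.0, max_hz=1000.0)])
```

With a 5 ms offset the first cancellations land at roughly 100, 300, 500, 700 and 900 Hz, right in the body of most instrument sounds, which is why the doubled notes sound hollow and phasey rather than simply louder.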

The best solution is a MIDI setting that normally must be made on the external keyboard/instrument itself - Local Off. Nearly all keyboard instruments with built-in sounds have this, though it’s usually buried somewhere in the instrument’s MIDI/setup/utility sub-pages.

When Local Control is turned Off, the keyboard’s MIDI output is disconnected from its own internal sound engine. This effectively separates the instrument into an independent controller and sound module, making integration with the DAW more straightforward - the keyboard’s own internal sounds are accessed the same way as any other physically separate module. This should work without a hitch, and Local Off is commonly used to integrate a guest musician’s favorite keyboard into a studio’s DAW rig - just be sure to set it back to normal operation when you’re done.
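Besides the front-panel setting, the MIDI 1.0 specification defines Local Control as a Channel Mode message: Control Change number 122, with a value of 0 for Local Off and 127 for Local On. Many instruments will accept it over MIDI, though some only honor the panel setting, so it’s worth checking the instrument’s MIDI implementation chart. The sketch below only builds the raw bytes; actually sending them would require a MIDI library, which is deliberately left out here.

```python
LOCAL_CONTROL_CC = 122  # MIDI 1.0 Channel Mode controller number for Local Control


def local_control(channel: int, on: bool) -> bytes:
    """Build the 3-byte Control Change message that switches Local Control."""
    if not 1 <= channel <= 16:
        raise ValueError("MIDI channel must be 1-16")
    status = 0xB0 | (channel - 1)  # Control Change status byte for this channel
    value = 127 if on else 0       # 0 = Local Off, 127 = Local On
    return bytes([status, LOCAL_CONTROL_CC, value])


if __name__ == "__main__":
    print(local_control(1, on=False).hex())  # prints "b07a00"
    print(local_control(1, on=True).hex())   # prints "b07a7f"
```

Sending the "on" version at the end of a session is one way to handle the reminder above about restoring normal operation, assuming the instrument responds to the message at all.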

In Use

Once any external keyboard and instruments are hooked up through the DAW, the procedure for recording with them may feel much the same as recording tracks with internal instruments, but there are significant differences. For one thing, whatever output-only latency the player usually feels with that setup when playing internal instruments will be roughly doubled, because there’s now also input latency from the incoming audio connection. This usually won’t be an issue if the DAW buffer size is set to a low value (128, 64 or 32 samples), but it may be with higher settings.
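The doubling can be put in rough numbers. One audio buffer adds buffer size ÷ sample rate of delay, and an external instrument adds an input buffer on top of the output buffer an internal instrument already incurs. This minimal sketch assumes exactly one buffer in each direction and ignores converter, driver and MIDI transmission overhead, so its figures are best read as lower bounds rather than what any particular interface will report.

```python
def buffer_latency_ms(buffer_samples: int, sample_rate_hz: int) -> float:
    """Latency contributed by one audio buffer, in milliseconds."""
    return buffer_samples / sample_rate_hz * 1000.0


def external_instrument_latency_ms(buffer_samples: int, sample_rate_hz: int) -> float:
    """Rough estimate: one input buffer for the returning audio plus one output buffer.

    Assumes one buffer per direction and ignores converter, driver and MIDI
    transmission delays, so real-world figures will be somewhat higher.
    """
    return 2 * buffer_latency_ms(buffer_samples, sample_rate_hz)


if __name__ == "__main__":
    for size in (32, 64, 128, 256, 512, 1024):
        ms = external_instrument_latency_ms(size, 44100)
        print(f"{size:>5} samples: ~{ms:.1f} ms")
```

At 44.1 kHz a 128-sample buffer works out to about 2.9 ms per direction, or roughly 5.8 ms for the external round trip; at 1024 samples the estimate grows to about 46 ms, which most players are likely to notice.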

When it comes time to record, typically you’d select/record arm the MIDI/Ext Inst track receiving the performance data from the keyboard, and it’s that MIDI data that gets recorded, not the audio from the external sound module. The audio will be heard during recording through the audio input in the External Instrument track (or an Aux, if desired). In playback, the recorded MIDI data will be sent out to that same external instrument, and the audio will still be heard as a live signal coming into the Ext Inst (or Aux) channel.

This is the main difference between playing back internal and external instrument performances - in both cases the MIDI data is what was recorded, not the sound, but an internal instrument, being a plug-in, is saved as part of the Session or Project, while an external instrument, of course, is not. For subsequent playback, the external instrument will have to be set up again (reconnected to the same MIDI port(s), with the same sound loaded as during the recording), and the sound is always a live audio signal.

Limitations Of Live

Internal instruments that use samples are subject to a similar restriction - if the samples are missing, the track can’t play, so there’s an option to copy any used samples into the Project folder, to make sure the session can “travel” with all its necessary components intact. This is not an option with external instruments - one way to preserve the instrument sound(s) as heard during the recording is to record the audio from the sound module instead of the MIDI data. This will ensure the availability of the correct instrument sounds for future playback, even without the external module(s), but it does lock you into those sounds.

Alternatively, you could record both the MIDI data and the instrument sound (by splitting the incoming audio from the sound modules into a parallel audio track). Now you’d have both original sound and the MIDI performance, which could be re-directed to a different instrument at some point if desired (more on that in a minute).

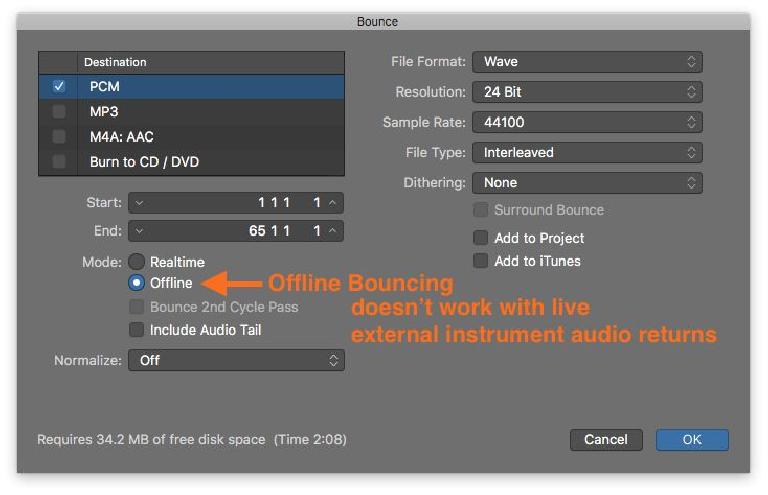

Even if you didn’t initially record the audio, you could always bounce down the live external instrument audio at any time. This brings up another limitation, though, one which is also true any time bouncing or exporting of tracks is done - since the audio from external instruments is a live input during playback, unlike the audio from internal instruments (which is generated inside the computer) you can’t bounce it offline (silently, faster than realtime) - any external instrument audio returns must be bounced in realtime.

This should only be a minor inconvenience, but if you’re, say, bouncing or exporting all tracks at once and external instrument returns are involved, you’ll either have to bounce everything in realtime, or make a second realtime pass for those tracks.

Rendering

The topic of bouncing - or rendering - external MIDI tracks to audio brings up a few additional questions. First, would it be better to record audio instead of MIDI when working with external instruments? Well, I’d say no, because while it does ensure the availability of the sound the player heard while performing (an important consideration), it also limits you to that sound. If future flexibility is a major consideration, I’d suggest recording both - the MIDI performance data (for normal live audio playback from the external module) and the audio itself (routed in parallel to an extra audio track). This would save time later (no real-time bouncing needed), and would offer the most flexibility down the road.

If you’re not worried about the availability of the external hardware instrument, is there any need to bounce or render the audio at all? Well, technically no, as long as you’re willing to perform the final bounce-to-disk in realtime rather than offline (a good idea anyway) - live-input external instrument tracks can be left that way to the end of the project. However, it’s still a good idea to render them as audio recordings at some point. Many people do it before they begin the Mix stage, but for others there’s no clear line of demarcation between tracking and mixing, and they want to maintain the greater flexibility of the MIDI recording/live playback mode of operation even while mixing.

I would suggest that at the end of every project, all external instrument tracks should be bounced/rendered to audio for archiving purposes. You never know when you’ll need to revisit a project, and who knows if those external sounds will still be available months or years down the road. You could be forced to substitute different sounds while trying to maintain the same sounds/same mix - a more daunting task without the isolated original tracks to go by.

Replacement

And that brings up one final issue - even if you plan to replace the original sounds with new ones, perhaps from a virtual instrument, it’s still a good idea to have an audio recording that preserves the sound the player heard during the performance. Replacing one instrument with another is not always as simple as it sounds. The original recording, with all the dynamic variations and other performance nuances the player intended, may turn out to be a critical reference when setting up a substitute instrument to be triggered by that MIDI performance. Without it as a guide for setting up velocity curves and other aspects of MIDI response, the track may just not sound right from a musical standpoint, even if the new instrument has inherently better sound quality.

Wrap Up

All this discussion may make it seem like using external instruments can be more trouble than it’s worth, but once you get used to the setup requirements and limitations, it’s not that difficult - if the perfect instrument sound for a project happens to be sitting in a hardware box, then jumping through a couple of hoops is a small price to pay for perfection.

© 2024 Ask.Audio

A NonLinear Educating Company
